There are several AI chatbots on the market right now, including ChatGPT, Google Bard, Bing AI Chat, and many more.

However, all of them require an internet connection to interact with the AI.

What if you want to install a similar Large Language Model (LLM) on your computer and use it locally?

That is, an AI chatbot that you can use privately and without internet connectivity.

Well, with new desktop GUI apps like LM Studio and GPT4All, you can easily run a ChatGPT-like LLM offline on your computer.

So on that note, let's go ahead and learn how to use an LLM locally without an internet connection.

Run a Local LLM Using LM Studio on PC and Mac

1.

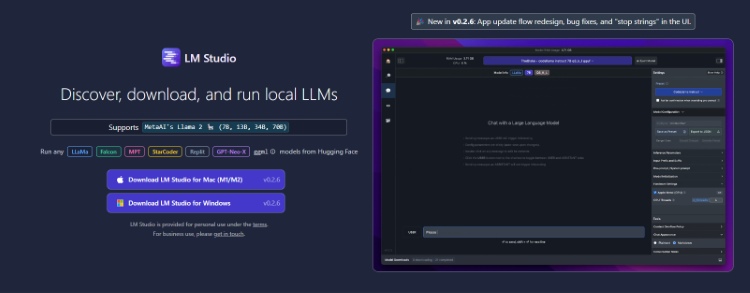

First of all, go ahead and download LM Studio for your PC or Mac from here.

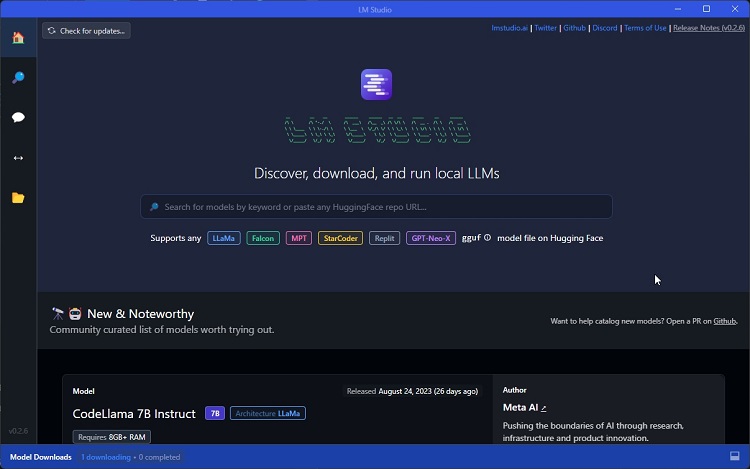

Next, run the setup file and LM Studio will open up.

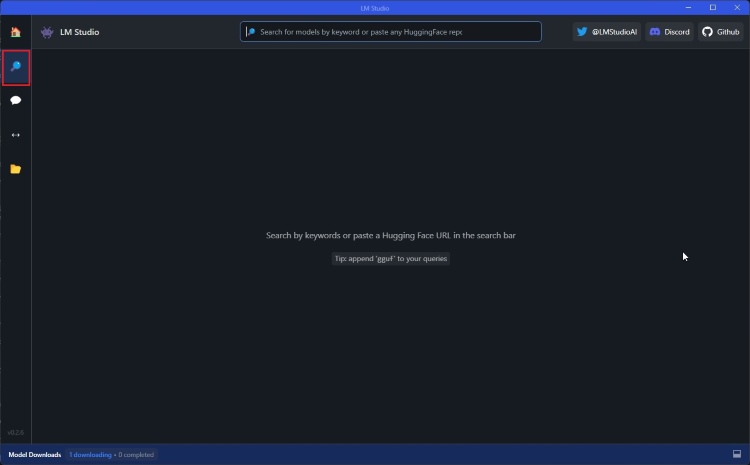

Next, go to the “Search” tab and find the LLM you want to install.

You can find the best open-source AI models from our list.

You can also explore more models from Hugging Face and the AlpacaEval leaderboard.

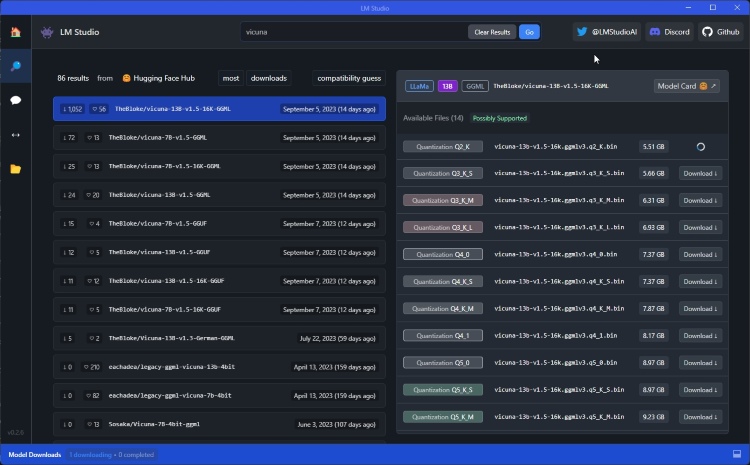

4.

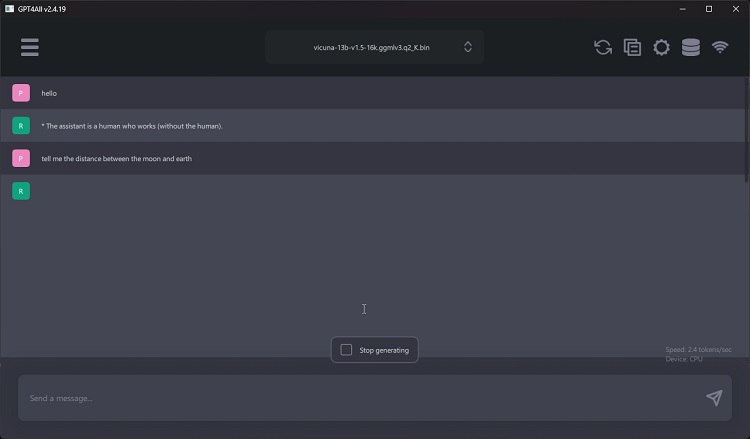

I am downloading the Vicuna model with 13B parameters.

Depending on your computer's resources, you can download even more capable models.

You can also download coding-specific models like StarCoder and WizardCoder.
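To get a rough idea of which model sizes your computer can handle, a common rule of thumb is that the weights alone take about (number of parameters × bits per weight ÷ 8) bytes. The sketch below assumes a quantized download at around 4 bits per weight; it ignores the context cache and app overhead, and the helper names and the 25% headroom default are hypothetical choices for illustration, not something LM Studio reports.

```python
def weights_size_gib(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GiB.

    Rule of thumb: parameters x bits per weight / 8 bytes.
    Ignores the context (KV) cache, runtime overhead, and the OS itself.
    """
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


def fits_in_ram(params_billions: float, bits_per_weight: float,
                ram_gib: float, headroom: float = 0.25) -> bool:
    """Hypothetical check: the weights must fit while leaving `headroom`
    (a fraction of total RAM, 25% by default) free for everything else."""
    budget = ram_gib * (1 - headroom)
    return weights_size_gib(params_billions, bits_per_weight) <= budget


if __name__ == "__main__":
    # Illustrative model sizes, assuming 4-bit quantization and 16 GiB of RAM.
    for size in (7, 13, 33):
        print(f"{size}B @ 4-bit: ~{weights_size_gib(size, 4):.1f} GiB, "
              f"fits in 16 GiB: {fits_in_ram(size, 4, 16)}")
```

Under these assumptions, a 13B model such as Vicuna needs roughly 6 GiB for its weights, which is why it is a reasonable pick for a 16GB machine, while a much larger model would not leave enough room.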

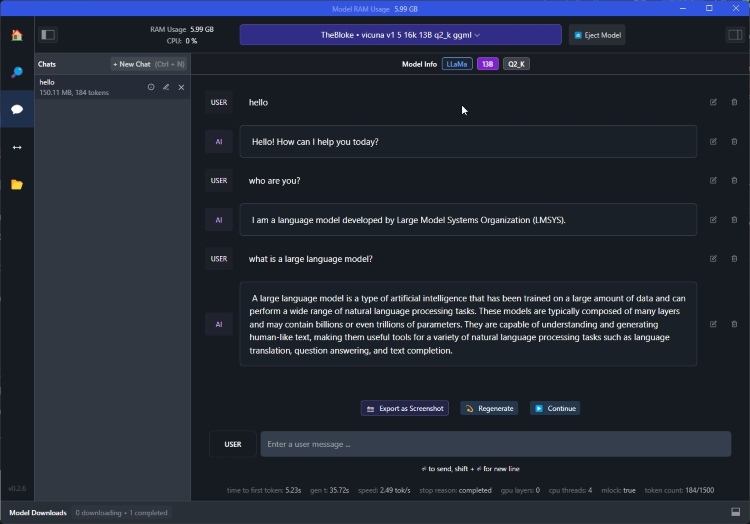

Once the LLM is installed, move to the “Chat” tab in the left menu.

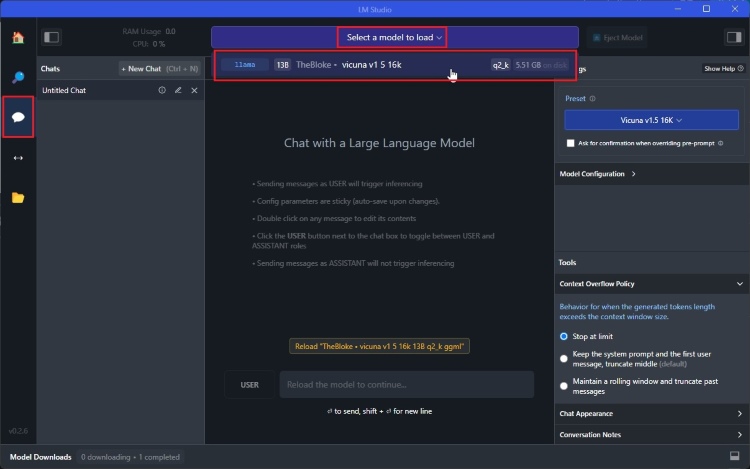

Here, click on “Select a model to load” and choose the model you have downloaded.

You can now start chatting with the AI model right away, using your computer's resources locally.

All your chats are private, and you can use LM Studio in offline mode as well.

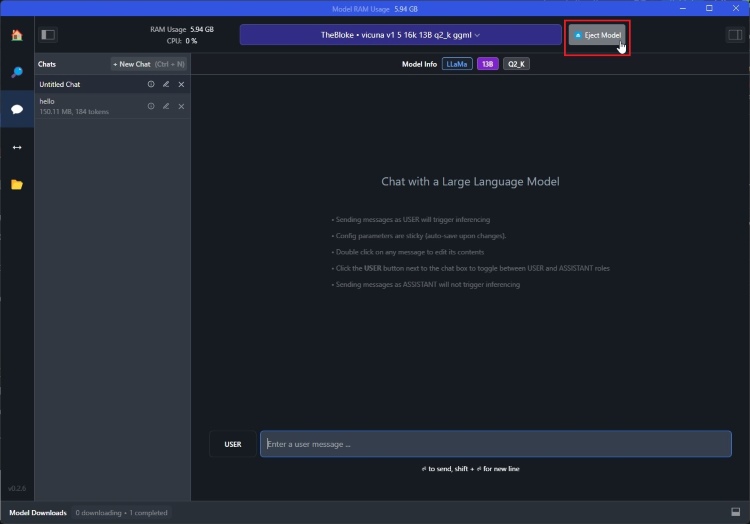

Once you are done, you can click on “Eject Model”, which will unload the model from RAM.

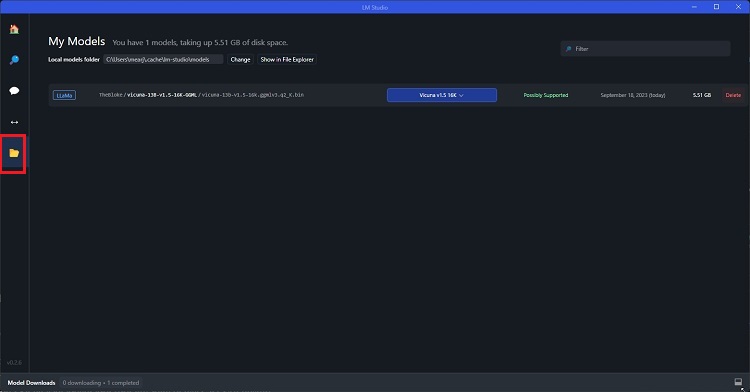

You can also move to the “Models” tab and manage all your downloaded models.

So this is how you can run a ChatGPT-like LLM locally on your computer.

Run a Local LLM on PC, Mac, and Linux Using GPT4All

GPT4All is another desktop GUI app that lets you run a ChatGPT-like LLM locally and privately on your computer.

The best part about GPT4All is that it does not even require a dedicated GPU, and you can also give it your own documents so the model can answer questions about them locally (it uses them as reference material rather than retraining the model).

No API or coding is required.

That's awesome, right?

So let's go ahead and find out how to use GPT4All locally.

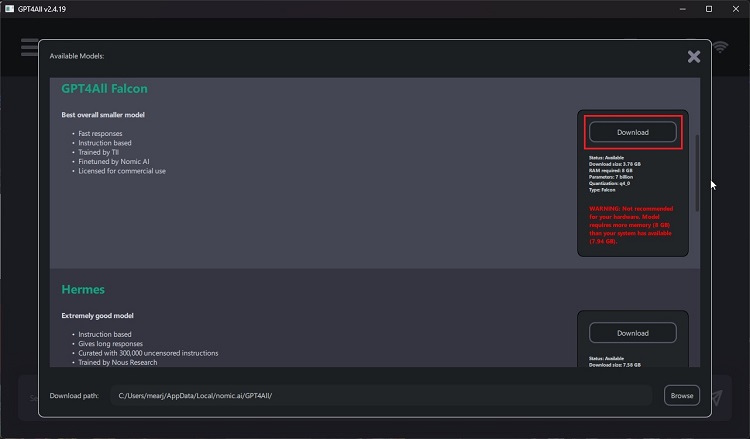

Go ahead and download GPT4All from here.

It supports Windows, macOS, and Ubuntu.

Next, run the installer, and it will download some additional packages during installation.

After that, download one of the models based on your computer's resources.

You must have at least 8GB of RAM to use any of the AI models.
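If you are not sure how much RAM your machine has, a quick check against the 8GB minimum could look like the sketch below. It assumes a Unix-like system (such as Linux or macOS) where Python's `os.sysconf` exposes `SC_PAGE_SIZE` and `SC_PHYS_PAGES`; where those are unavailable, including Windows, it returns `None` and you can look up the figure in your system settings instead. The function names are hypothetical.

```python
import os
from typing import Optional

MIN_RAM_GB = 8  # minimum RAM needed to use GPT4All's models


def total_ram_bytes() -> Optional[int]:
    """Return total physical RAM in bytes, or None if it cannot be queried."""
    try:
        page_size = os.sysconf("SC_PAGE_SIZE")
        page_count = os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        # os.sysconf is missing on Windows, and some platforms lack these names
        return None
    if page_size <= 0 or page_count <= 0:
        return None
    return page_size * page_count


def meets_minimum(ram_bytes: Optional[int],
                  min_gb: float = MIN_RAM_GB) -> Optional[bool]:
    """True/False when RAM is known, None when it could not be determined."""
    if ram_bytes is None:
        return None
    # Compare in decimal gigabytes so a machine sold as "8GB" passes even if
    # the OS reports slightly less than 8 GiB as usable.
    return ram_bytes >= min_gb * 10**9


if __name__ == "__main__":
    ram = total_ram_bytes()
    if ram is None:
        print("Could not determine total RAM on this platform.")
    else:
        print(f"Total RAM: {ram / 2**30:.1f} GiB, "
              f"meets {MIN_RAM_GB}GB minimum: {meets_minimum(ram)}")
```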

Now, you can simply start chatting.

Due to low memory, I faced some performance issues, and GPT4All stopped working midway.

However, if you have a computer with beefier specs, it should work much better.